Table of Contents

- Abstract

- Introduction

- Rationale for Use of Decision Models

- Selection of Topics for Decision Models

- Historical Incorporation of Decision Model Results in Recommendations

- Methodological Considerations

- Conclusions and Future Directions

- Copyright and Source Information

- References

- Appendix: U.S. Preventive Services Task Force Members

Abstract

The U.S. Preventive Services Task Force (USPSTF) develops evidence-based recommendations about preventive care based on comprehensive systematic reviews of the best available evidence. Decision models provide a complementary, quantitative approach to support the USPSTF as it deliberates about the evidence and develops recommendations for clinical and policy use. This article describes the rationale for using modeling, an approach to selecting topics for modeling, and how modeling may inform recommendations about clinical preventive services. Decision modeling is useful when clinical questions remain about how to target an empirically established clinical preventive service at the individual or program level or when complex determinations of magnitude of net benefit, overall or among important subpopulations, are required. Before deciding whether to use decision modeling, the USPSTF assesses whether the benefits and harms of the preventive service have been established empirically, assesses whether there are key issues about applicability or implementation that modeling could address, and then defines the decision problem and key questions to address through modeling. Decision analyses conducted for the USPSTF are expected to follow best practices for modeling. For chosen topics, the USPSTF assesses the strengths and limitations of the systematically reviewed evidence and the modeling analyses and integrates the results of each to make preventive service recommendations.

Introduction

The goal of the U.S. Preventive Services Task Force (USPSTF) is to provide evidence-based recommendations about the use of clinical preventive services in asymptomatic persons. The development of recommendations for preventive services typically requires deliberation using comprehensive systematic reviews of the best available evidence and a series of complex judgments. These include integrating evidence about benefits and harms from randomized clinical trials and observational studies; assessing whether benefits outweigh harms and, if so, by how much and in which populations; assessing the degree of certainty the evidence provides for both benefits and harms; and determining ages and other risk factors needed to specify when to begin and stop offering the service and in which populations. The USPSTF assessment of the overall evidence for a clinical preventive service requires 2 key determinations: assessment of the degree of certainty about the net benefit (benefits minus harms) and assessment of the magnitude of net benefit. If the USPSTF determines that there is high certainty of a substantial net benefit, it gives the service an "A" recommendation. If there is high certainty of moderate net benefit or moderate certainty that the net benefit is moderate to substantial, the USPSTF gives the service a "B" recommendation. Tables 1 and 2 describe how the USPSTF assigns grades for recommendations based on the magnitude of net benefit and degree of certainty.
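The grading logic described above can be sketched as a small lookup. This is a minimal illustration based on one reading of the grade definitions in Table 1, not an official USPSTF algorithm; the function name, the category labels, and the mapping of low certainty to an I statement are assumptions made for the example.

```python
from typing import Literal

Certainty = Literal["high", "moderate", "low"]
Magnitude = Literal["substantial", "moderate", "small", "zero_or_negative"]


def assign_grade(certainty: Certainty, magnitude: Magnitude) -> str:
    """Map certainty and magnitude of net benefit to a grade (illustrative only)."""
    if certainty not in ("high", "moderate", "low"):
        raise ValueError(f"unknown certainty: {certainty}")
    if magnitude not in ("substantial", "moderate", "small", "zero_or_negative"):
        raise ValueError(f"unknown magnitude: {magnitude}")

    if certainty == "low":
        return "I"  # assumption: low certainty means the evidence is insufficient
    if magnitude == "zero_or_negative":
        return "D"  # moderate or high certainty of no net benefit or net harm
    if magnitude == "small":
        return "C"  # at least moderate certainty that the net benefit is small
    if magnitude == "substantial" and certainty == "high":
        return "A"  # high certainty of substantial net benefit
    return "B"      # high certainty of moderate, or moderate certainty of moderate to substantial


if __name__ == "__main__":
    for c in ("high", "moderate", "low"):
        for m in ("substantial", "moderate", "small", "zero_or_negative"):
            print(f"{c:>8} certainty, {m:<16} -> {assign_grade(c, m)}")
```

In practice the two inputs are themselves the product of deliberation over the evidence, so a lookup like this only captures the final step of the judgment.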

Decision models are a formal methodological approach for simulating the effects of different interventions (for example, screening and treatment) on health outcomes. Unlike epidemiologic models that project the course of disease or seek to make inferences about the cause of disease, decision models assess the benefits and harms of intervention strategies by examining the effect of specific interventions. The USPSTF has used decision models to aid in the development of recommendations for 4 cancer screening topics (colorectal, breast, cervical, and lung cancer)1-4 and 1 preventive medication topic (aspirin to prevent cardiovascular disease and colorectal cancer [CRC]).5 For these services, decision models allowed the USPSTF to consider the lifetime effect of different screening programs (for example, combinations of screening methods and intervals for colorectal or cervical cancer) in specific populations (that is, various ages for starting or stopping screening for breast, cervical, colorectal, and lung cancer) (Table 3).

This article outlines the history and rationale for the USPSTF's use of decision models and articulates its current approach to using these models. The USPSTF developed this approach from 2014 to 2015 to establish guidance on determining topics for which decision modeling would be useful in making USPSTF recommendation statements.

Rationale for Use of Decision Models

Modeling offers the potential to address questions that have not been or cannot be answered by clinical trials.6 Modeling is a potentially useful complement to the systematic evidence reviews prepared for the USPSTF to assess appropriate starting or stopping ages; compare alternative intervals of preventive service delivery; compare alternative technologies, such as different screening tests; assess the effect of a new test in an established screening program; quantify potential net benefit more precisely or specifically than can be accomplished based on the systematic evidence review alone; extend the time horizon beyond that available from outcomes studies; assess net benefit for populations and groups at higher or lower risk for benefits or harms; assess net benefit stratified by sex or other clinically important demographic characteristics, such as race/ethnicity or comorbid conditions; and assess uncertainty in estimates of benefits, harms, and net benefits.6-8

Of particular importance, models can enable the USPSTF to integrate evidence across the analytic framework and key questions in the accompanying systematic review. For example, the model for the USPSTF recommendations on aspirin for the primary prevention of cardiovascular disease and CRC helped integrate evidence across key questions from 3 systematic evidence reviews. The reviews discussed the effect of aspirin on cardiovascular and total mortality, the effect of aspirin in reducing the incidence of CRC, and the harms of aspirin.9

Whether the USPSTF uses models for a preventive service depends on the service under consideration, the state of existing empirical evidence, the suitability of models for specific purposes, and the resources available.

Selection of Topics for Decision Models

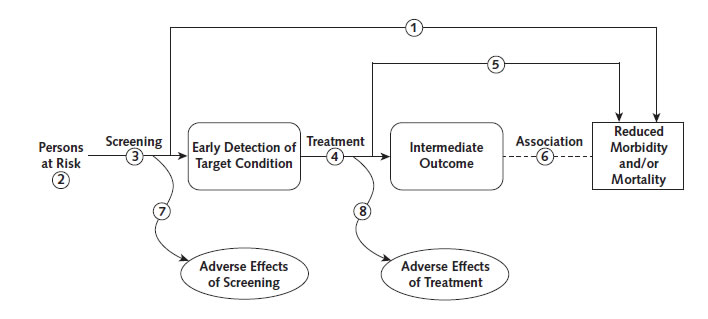

The USPSTF considers using decision modeling only for preventive services for which there is either direct evidence of benefit in clinical trials, at least for some populations, or indirect evidence of benefit established through the linkages in the analytic framework (Figure 1) based on the systematic evidence review.10 Direct evidence comes from a clinical trial that evaluates the preventive service and assesses health outcomes of importance to patients, such as disease-specific mortality, total mortality, or quality of life (Figure 1, step 1). An example is the NLST (National Lung Screening Trial),11 which showed that screening with low-dose computed tomography (CT) reduced lung cancer–specific mortality by 16%. Indirect evidence comprises evidence for a series of linkages that connect the intervention to health outcomes, with adequate evidence that the intervention changes health outcomes (Figure 1, steps 2 to 4). For example, the effect of HIV screening on mortality has not been assessed directly in clinical trials, but there is evidence that HIV can be diagnosed accurately and that early treatment reduces mortality and HIV transmission.12 In contrast, a recent assessment of screening for thyroid dysfunction found that the evidence was insufficient to assess benefits and harms; therefore, this topic would not be a candidate for modeling.13

Even with adequate direct or indirect evidence, the USPSTF uses modeling only if there are important considerations or uncertainty that empirical evidence has not addressed. Decision modeling is primarily warranted when there are outstanding clinical questions about how to best target an empirically established clinical preventive service at the individual and program level. In addition, modeling can help the USPSTF assess the net benefit of a preventive service when there are established benefits and harms.

For most A and B recommendations, the primary evidence is sufficient for the USPSTF to understand the net benefit and develop a recommendation; thus, modeling is not necessary. For example, for the USPSTF recommendations on smoking cessation, the evidence for the link between smoking and harmful outcomes was strong, and the evidence for the usefulness of behavioral interventions alone or in combination with pharmacotherapy to improve achievement of smoking cessation was convincing. Because the key questions could be answered by the evidence review, modeling was not needed.14
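The two conditions described above (established evidence of benefit, plus important questions that the evidence leaves open) can be expressed as a simple screen. The sketch below is hypothetical: the `Topic` fields and the `is_modeling_candidate` function are invented for illustration, the open question listed for lung cancer screening is a paraphrase, and the actual decision also weighs resources and priorities.

```python
from dataclasses import dataclass, field


@dataclass
class Topic:
    name: str
    direct_evidence: bool      # trial evidence on health outcomes
    indirect_evidence: bool    # adequate chain of linkages in the analytic framework
    open_questions: list[str] = field(default_factory=list)  # issues the evidence leaves unresolved


def is_modeling_candidate(topic: Topic) -> bool:
    """Return True only if benefit is established AND important questions remain."""
    benefit_established = topic.direct_evidence or topic.indirect_evidence
    return benefit_established and len(topic.open_questions) > 0


topics = [
    # Direct trial evidence, with questions left about how to target screening.
    Topic("lung cancer screening", True, False, ["starting and stopping ages"]),
    # Evidence insufficient to assess benefits and harms.
    Topic("thyroid dysfunction screening", False, False, ["net benefit"]),
    # Evidence review answered the key questions.
    Topic("smoking cessation interventions", True, False, []),
]

if __name__ == "__main__":
    for t in topics:
        print(f"{t.name}: candidate for modeling = {is_modeling_candidate(t)}")
```

Only the first topic passes both conditions, which matches the examples in the text: thyroid dysfunction screening fails the evidence condition, and smoking cessation leaves no open question for a model to address.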

Prior decisions about using modeling for USPSTF topics have been made on a case-by-case basis. Because the number of topics for which modeling could be considered is expanding and resources for modeling are limited, the USPSTF determined that a more systematic approach for assessing when to use modeling as an adjunct to systematic reviews would be useful.

To help select and prioritize topics for modeling, the USPSTF developed a framework for deciding when and how it would incorporate decision modeling into the development of recommendations. This framework combines scientific considerations with process issues and also requires value judgments. The USPSTF proposed a set of 4 sequential questions to be answered in the early stages of planning when feasible, ideally during the research plan development phase. These questions attempt to clarify the appropriateness, focus, approach, and requirements of potentially incorporating a decision model with a systematic review for a specific topic. The process for addressing these questions is represented in Figure 2.

Addressing the 4 questions is intended to systematize the consideration of decision models and assist USPSTF leadership in prioritizing competing demands across its entire portfolio by determining when adding decision modeling to a review is both feasible and a high priority. A more detailed description of the approach is available in the USPSTF procedure manual.15

Topics with existing USPSTF recommendations based on relatively current systematic reviews may have a reasonably concrete set of questions that trigger an early decision to use decision modeling. This is particularly common when decision models informed the previous recommendation. For new topics, or those for which the evidence base for the efficacy of a preventive service is only beginning to become clear, additional time will probably be required before the desirability of decision modeling can be determined (Figure 1, step 3). In most cases, some degree of simultaneous activity will require coordination between the decision modeling and systematic review teams.

For topics for which modeling may be useful, an additional consideration is whether existing, published, model-based analyses are sufficient to address the needs of the USPSTF. Using existing analyses is faster and less expensive than commissioning new ones. However, identifying and evaluating existing models can be resource-intensive and may not yield a model that sufficiently answers questions of importance to the USPSTF. Commissioning new modeling enables the USPSTF to work with the modelers to better understand modeling results, ensure that the most current and applicable evidence is used, and evaluate questions that may not be addressed in the literature.

Decision modeling increases resource requirements for systematic reviewers and the modeling team. Coordinating systematic reviews and modeling to inform an evidence-based recommendation requires planning to ensure appropriate timing and integration as well as substantial investment to ensure consistency and clarity of results. As a result, modeling may also lengthen the time required to develop a recommendation.

The USPSTF leadership decides whether to use modeling for a topic after considering both the potential benefits of modeling and the available resources. Because modeling requires substantial additional resources and expertise, it is used selectively. Highest priority topics include those for which prior recommendations used modeling and those for which there are important additional questions that can only be addressed by modeling. To date, such questions have been the comparison of alternative strategies for screening (for example, screening for CRC and lung cancer) and the estimation of net benefit (for example, the benefit and harms of aspirin use for the primary prevention of cardiovascular disease and CRC).

Historical Incorporation of Decision Model Results in Recommendations

The USPSTF considers modeling results as supplementary analyses to systematic evidence reviews. As noted, the USPSTF uses modeling analyses only if the primary evidence is sufficient to make a recommendation. In general, the grades for a recommendation (A, B, C, and D and the I statement) are based primarily on assessment of the evidence in the systematic review rather than modeling. In complex cases, however, modeling can be useful in estimating the magnitude of net benefit based on analyses of relative benefits and harms of alternative screening strategies (for example, sigmoidoscopy vs. colonoscopy for CRC screening), different starting and stopping ages, and different screening intervals (for example, lung cancer). During recommendation development, a small working group assigned to the topic works closely with the evidence review and modeling teams to develop key questions (which are publicly posted), discuss interim results, and iteratively refine the questions and analyses. The results of the final evidence synthesis from the systematic review and modeling results are presented to the entire USPSTF during the meeting in which the recommendation statement is deliberated.

In addressing how to incorporate modeling results, the USPSTF assesses how well the model captures both the benefits and harms of the intervention and the strength of the evidence that supports the model. The USPSTF gives more weight to modeling analyses when the models fully capture the relevant benefits and harms and the underlying evidence is adequate for the analysis being done. To make such assessments, the USPSTF considers the model's structure, assumptions, and limitations. It also assesses the degree of concordance between the data and assumptions in the model and the systematic evidence review. For example, the modeling for the recommendations on CRC screening1 suggested that screening before age 50 years may be beneficial.16 However, because there are few empirical data on screening in adults younger than 50 years, the USPSTF gave less weight to the model-based analysis of screening beginning before age 50 years.

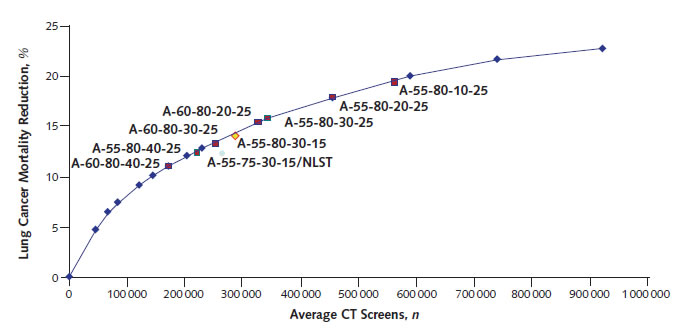

The modeling analyses for lung cancer screening,17 CRC screening,16 and aspirin for primary prevention9 are useful examples. The modeling analysis for lung cancer17 evaluated 576 possible screening programs for such factors as different starting and stopping ages, history of smoking (in pack-years), and years since cessation of smoking (Figure 3). The NLST enrolled adults aged 55 to 74 years at entry who had at least 30 pack-years of smoking and had quit no more than 15 years before entry. The trial followed patients for up to 7 years and thus had some who were older than 75 years at completion. As shown in Figure 3, the strategy that used the NLST entry criteria was slightly off the solid line that marks the efficient frontier, indicating that one could obtain the same benefit with fewer CT scans or more benefit for the same number of CT scans with strategies that were slight variations of the NLST criteria. These analyses helped inform the USPSTF about which criteria to choose for screening. Ultimately, it recommended screening adults aged 55 to 80 years with 30 pack-years of smoking who had quit less than 15 years before, a strategy that is similar to that of the NLST but lies on the efficient frontier.
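The idea of an efficient frontier can be illustrated with a short sketch that keeps only the strategies for which no alternative offers at least as much benefit with no more CT scans. The strategy labels and numbers below are made up for illustration and are not results from the published analysis, and the simple dominance rule used here is an assumption; the published modeling study may define its frontier differently.

```python
from typing import NamedTuple


class Strategy(NamedTuple):
    label: str
    ct_scans: float  # screening burden (hypothetical units)
    benefit: float   # health benefit, e.g. deaths averted (hypothetical units)


def efficient_frontier(strategies: list[Strategy]) -> list[Strategy]:
    """Return the strategies that are not dominated by any other strategy.

    A strategy is dominated if another one needs no more scans and yields
    no less benefit (and is strictly better on at least one of the two).
    Exact duplicates are kept only once.
    """
    frontier: list[Strategy] = []
    best_benefit = float("-inf")
    # Walk from the fewest scans upward; on ties, consider the higher benefit first.
    for s in sorted(strategies, key=lambda s: (s.ct_scans, -s.benefit)):
        if s.benefit > best_benefit:
            frontier.append(s)
            best_benefit = s.benefit
    return frontier


# Hypothetical strategies, for illustration only.
strategies = [
    Strategy("A", 100, 10),
    Strategy("B", 200, 18),
    Strategy("trial-like", 220, 17),  # more scans and less benefit than B
    Strategy("C", 300, 22),
    Strategy("D", 300, 20),           # same scans as C, less benefit
]

if __name__ == "__main__":
    for s in efficient_frontier(strategies):
        print(s)
```

With these made-up inputs, the "trial-like" strategy falls just off the frontier because strategy B achieves more benefit with fewer scans, which mirrors the situation described for the NLST entry criteria.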

The modeling analyses for CRC screening16 assessed the relative benefits and harms of alternative strategies, including colonoscopy, fecal immunochemical testing, and sigmoidoscopy. The analyses helped the USPSTF assess the relative effectiveness of different tests and provided insight about the effect of different screening intervals and starting or stopping ages. For example, the analyses indicated that sigmoidoscopy alone provides substantially fewer life-years gained than other methods.

The modeling analyses for aspirin use for the primary prevention of cardiovascular disease and CRC9 integrated benefits from reduced cardiovascular disease and CRC incidence as well as the harms of aspirin use. The analyses showed that aspirin use provides greater net benefit for adults aged 50 to 59 years than those aged 60 to 69 years. For example, aspirin use in women aged 50 to 59 years with a 10-year risk for cardiovascular disease of 15% resulted in 33.4 life-years and 71.6 quality-adjusted life-years per 1000 women, whereas for women aged 60 to 69 years, the corresponding benefits were 1.7 life-years and 32.4 quality-adjusted life-years. Benefit is reduced in older adults because the risk for bleeding with aspirin increases with age and because they are less likely to benefit from reduced CRC incidence given that this effect takes at least 10 years to become evident. These analyses informed the USPSTF's estimate of the magnitude of net benefit of aspirin use by age and were among several factors considered in assigning a grade.
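To put the per-1000 figures above in perspective, the sketch below converts them to average days per woman and computes the gap between the two age groups. The input numbers are those quoted in the paragraph above; the helper name and the 365.25 days-per-year convention are assumptions made for the example, and the per-person values are cohort averages rather than what any individual would gain.

```python
# Modeled benefit of aspirin use per 1000 women with a 15% 10-year CVD risk,
# as quoted in the text above.
results_per_1000 = {
    "50-59": {"life_years": 33.4, "qalys": 71.6},
    "60-69": {"life_years": 1.7, "qalys": 32.4},
}

COHORT_SIZE = 1000
DAYS_PER_YEAR = 365.25  # assumption: average calendar year length


def per_person_days(years_per_cohort: float) -> float:
    """Convert years gained per cohort into average days gained per person."""
    return years_per_cohort / COHORT_SIZE * DAYS_PER_YEAR


def difference(outcome: str) -> float:
    """Younger-minus-older difference for an outcome, per 1000 women."""
    return results_per_1000["50-59"][outcome] - results_per_1000["60-69"][outcome]


if __name__ == "__main__":
    for age_group, outcomes in results_per_1000.items():
        for outcome, value in outcomes.items():
            days = per_person_days(value)
            print(f"ages {age_group}: {value} {outcome} per 1000 = {days:.1f} days per woman")
    print(f"difference in life-years per 1000: {difference('life_years'):.1f}")
    print(f"difference in QALYs per 1000: {difference('qalys'):.1f}")
```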

The USPSTF methods work group is continuing to work on guidance about how to integrate model results and evidence reviews. As the USPSTF gains more experience with modeling, it expects to further modify and adjust its approach.

Methodological Considerations

The USPSTF expects its models to conform to recommended best practices.6, 7, 18-24 The population represented, intervention delivery and performance characteristics, adherence assumptions, choice of base case, and time horizon should fit the questions asked by the USPSTF and be clearly communicated to users of its recommendations. The USPSTF's approach of conducting modeling in conjunction with a systematic evidence review allows for a coordinated effort that brings the rigor of the systematic evidence review to the estimation of key model parameters. The modeling results should highlight assumptions, limitations, and implications of uncertainty in the analyses.

When both models and evidence reviews are used to inform recommendations, the USPSTF and other users of these evidence synthesis products should understand the consistency and differences in the methods and findings from the systematic review and the models. The USPSTF has identified several areas with the potential for difference. For example, the systematic evidence review evaluates efficacy (or effectiveness) over the duration of the clinical trials, whereas modeling studies often evaluate benefits over longer time horizons—perhaps the entire lifetime of persons who have the intervention or screening.
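The effect of the time horizon can be seen in a deliberately simple cohort sketch with two states (alive and dead). Everything in it is hypothetical: the constant annual death probability, the relative risk, and the horizons are chosen only to show the mechanics, and models used for recommendations rely on age-specific rates, disease states, and harms that this toy example omits. It also assumes that the intervention effect persists beyond the trial period, which is itself a modeling assumption that would need to be stated and tested.

```python
def life_years(annual_death_prob: float, horizon_years: int) -> float:
    """Expected life-years per person over the horizon in an alive/dead cohort model."""
    alive = 1.0   # fraction of the cohort still alive
    total = 0.0
    for _ in range(horizon_years):
        alive *= 1.0 - annual_death_prob  # survivors at the end of the year
        total += alive                    # one year credited to each survivor
    return total


def life_years_gained(control_death_prob: float,
                      relative_risk: float,
                      horizon_years: int) -> float:
    """Life-years gained per person when the intervention scales the death probability."""
    with_intervention = life_years(control_death_prob * relative_risk, horizon_years)
    without_intervention = life_years(control_death_prob, horizon_years)
    return with_intervention - without_intervention


if __name__ == "__main__":
    # Hypothetical inputs: 2% annual mortality, 10% relative reduction.
    for horizon in (7, 40):
        gain = life_years_gained(0.02, 0.9, horizon)
        print(f"{horizon}-year horizon: {gain * 1000:.1f} life-years gained per 1000 people")
```

Under these assumptions the projected gain over a long horizon is much larger than the gain over a trial-length horizon, which is why estimates from a model and from a systematic review are not directly comparable unless the horizon is taken into account.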

Coordination between the systematic review and modeling teams is important. Engaging modelers early in the scientific process can be useful,25, 26 but the most important questions for decision modeling can change (or do not become apparent) well into the systematic review process or after it is complete. If decision modeling is a high priority for a topic, the USPSTF must determine when it will be possible to define the objective and key questions for decision modeling.18, 26 Systematic review results for some key questions may provide needed values or validation targets. However, in many cases, the systematic evidence review will not provide values that can be input directly into the models, and modeling teams may need to do their own literature review.

Conclusions and Future Directions

Decision modeling has been useful as an adjunct to systematic reviews for selected USPSTF topics. It has helped the USPSTF integrate evidence across key questions addressed in systematic reviews, compare alternative screening methods and strategies, and weigh harms and benefits of interventions. However, modeling also requires additional expertise and resources. National Academy of Medicine standards for evidence-based recommendation development27, 28 are already resource-intensive, and the addition of decision modeling further increases resource requirements and may prolong development of new or updated recommendations. The framework developed by the USPSTF15 will help determine when to use modeling as a complement to systematic reviews and builds on the foundation laid by others outlining the use of decision modeling in the development of clinical practice recommendations.6, 24, 29-32

More experience will help the USPSTF to further define strengths and limitations of modeling for recommendation development and more efficiently integrate systematic reviews and models. As the USPSTF gains experience with modeling analyses across a broader range of topics, the use of model-based estimates of net benefit may also facilitate comparison of benefits and harms across topics and recommendations. Such analyses may also be useful in discussions about the magnitude of benefit, and input from experts and stakeholders will help the USPSTF assess the usefulness of modeling for such comparisons.

Copyright and Source Information

Source: This article was first published in the Annals of Internal Medicine on July 5, 2016.

Financial Support: This article is based in part on a report by the Kaiser Permanente Research Affiliates Evidence-based Practice Center under contract HHS-290-2007-10057-I to the Agency for Healthcare Research and Quality (AHRQ), Rockville, Maryland. AHRQ staff provided oversight for the project and assisted in external review of the companion draft report.

Disclosures: Disclosures can be viewed at: https://rmed.acponline.org/authors/icmje/ConflictOfInterestForms.do?msNum=M15-2531. Dr. Henderson reports grants from AHRQ during the conduct of this work. Dr. Pignone reports having developed and revised decision models for preventive services (including aspirin to prevent cardiovascular disease and CRC screening) that are considered by the USPSTF. Dr. Maciosek reports grants from AHRQ during the conduct of this work. Authors not named here have disclosed no conflicts of interest.

References:

- U.S. Preventive Services Task Force. Screening for colorectal cancer: U.S. Preventive Services Task Force recommendation statement. JAMA. 2016;315:1-12. doi: 10.1001/JAMA.2016.5989.

- Siu AL; U.S. Preventive Services Task Force. Screening for breast cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2016;164:279-96. [PMID: 26757170]

- Moyer VA; U.S. Preventive Services Task Force. Screening for cervical cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2012;156:880-91. [PMID: 22711081]

- Moyer VA; U.S. Preventive Services Task Force. Screening for lung cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2014;160:330-8. [PMID: 24378917]

- Bibbins-Domingo K; U.S. Preventive Services Task Force. Aspirin use for the primary prevention of cardiovascular disease and colorectal cancer: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2016;164:836-45. [PMID: 27064677]

- Habbema JD, Wilt TJ, Etzioni R, Nelson HD, Schechter CB, Lawrence WF, et al. Models in the development of clinical practice guidelines. Ann Intern Med. 2014;161:812-8. [PMID: 25437409]

- Caro JJ, Briggs AH, Siebert U, Kuntz KM; ISPOR-SMDM Modeling Good Research Practices Task Force. Modeling good research practices—overview: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—1. Value Health. 2012;15:796-803. [PMID: 22999128]

- Sox HC, Higgins MC, Owens DK. Medical Decision Making. 2nd ed. Chichester, United Kingdom: J Wiley; 2013.

- Dehmer SP, Maciosek MV, Flottemesch TJ. Aspirin Use to Prevent Cardiovascular Disease and Cancer: A Decision Analysis. AHRQ publication no. 15-05229-EF-1. Rockville, MD: Agency for Healthcare Research and Quality; 2015.

- Harris RP, Helfand M, Woolf SH, Lohr KN, Mulrow CD, Teutsch SM, et al; Methods Work Group, Third US Preventive Services Task Force. Current methods of the US Preventive Services Task Force: a review of the process. Am J Prev Med. 2001;20:21-35. [PMID: 11306229]

- Pinsky PF, Church TR, Izmirlian G, Kramer BS. The National Lung Screening Trial: results stratified by demographics, smoking history, and lung cancer histology. Cancer. 2013;119:3976-83. [PMID: 24037918]

- Moyer VA; U.S. Preventive Services Task Force. Screening for HIV: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2013;159:51-60. [PMID: 23698354]

- LeFevre ML; U.S. Preventive Services Task Force. Screening for thyroid dysfunction: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2015;162:641-50. [PMID: 25798805]

- Siu AL; U.S. Preventive Services Task Force. Behavioral and pharmacotherapy interventions for tobacco smoking cessation in adults, including pregnant women: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2015;163:622-34. [PMID: 26389730]

- U.S. Preventive Services Task Force. Procedure Manual. Rockville, MD: U.S. Preventive Services Task Force; 2015.

- Zauber A, Knudsen A, Rutter CM, Lansdorp-Vogelaar I, Kuntz KM. Evaluating the Benefits and Harms of Colorectal Cancer Screening Strategies: A Collaborative Modeling Approach. AHRQ Publication no. 14-05203-EF-2. Rockville, MD: Agency for Healthcare Research and Quality; 2015.

- de Koning HJ, Meza R, Plevritis SK, ten Haaf K, Munshi VN, Jeon J, et al. Benefits and harms of computed tomography lung cancer screening strategies: a comparative modeling study for the U.S. Preventive Services Task Force. Ann Intern Med. 2014;160:311-20. [PMID: 24379002]

- Roberts M, Russell LB, Paltiel AD, Chambers M, McEwan P, Krahn M; ISPOR-SMDM Modeling Good Research Practices Task Force. Conceptualizing a model: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—2. Value Health. 2012;15:804-11. [PMID: 22999129]

- Pitman R, Fisman D, Zaric GS, Postma M, Kretzschmar M, Edmunds J, et al; ISPOR-SMDM Modeling Good Research Practices Task Force. Dynamic transmission modeling: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—5. Value Health. 2012;15:828-34. [PMID: 22999132]

- Karnon J, Stahl J, Brennan A, Caro JJ, Mar J, Möller J; ISPOR-SMDM Modeling Good Research Practices Task Force. Modeling using discrete event simulation: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—4. Value Health. 2012;15:821-7. [PMID: 22999131]

- Briggs AH, Weinstein MC, Fenwick EA, Karnon J, Sculpher MJ, Paltiel AD; ISPOR-SMDM Modeling Good Research Practices Task Force. Model parameter estimation and uncertainty: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—6. Value Health. 2012;15:835-42. [PMID: 22999133]

- Eddy DM, Hollingworth W, Caro JJ, Tsevat J, McDonald KM, Wong JB; ISPOR-SMDM Modeling Good Research Practices Task Force. Model transparency and validation: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—7. Value Health. 2012;15:843-50. [PMID: 22999134]

- Siebert U, Alagoz O, Bayoumi AM, Jahn B, Owens DK, Cohen DJ, et al. State-transition modeling: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force—3. Med Decis Making. 2012;32:690-700. [PMID: 22990084]

- Kuntz K, Sainfort F, Butler M, Taylor B, Kulasingam S, Gregory S, et al. Decision and Simulation Modeling in Systematic Reviews. Rockville, MD: Agency for Healthcare Research and Quality; 2013.

- Whitlock EP, Williams SB, Burda BU, Feightner A, Beil T. Aspirin Use in Adults: Cancer, All-Cause Mortality, and Harms. A Systematic Evidence Review for the U.S. Preventive Services Task Force. Evidence synthesis no. 132. AHRQ Publication no. 13-05193-EF-1. Rockville, MD: Agency for Healthcare Research and Quality; 2015.

- Ades AE, Caldwell DM, Reken S, Welton NJ, Sutton AJ, Dias S. Evidence synthesis for decision making 7: a reviewer's checklist. Med Decis Making. 2013;33:679-91. [PMID: 23804511]

- Institute of Medicine. Finding What Works in Health Care: Standards for Systematic Reviews. Washington, DC: National Academies Pr; 2011.

- Institute of Medicine. Clinical Practice Guidelines We Can Trust. Washington, DC: National Academies Pr; 2011.

- Saha S, Hoerger TJ, Pignone MP, Teutsch SM, Helfand M, Mandelblatt JS; Cost Work Group, Third US Preventive Services Task Force. The art and science of incorporating cost effectiveness into evidence-based recommendations for clinical preventive services. Am J Prev Med. 2001;20:36-43. [PMID: 11306230]

- Pignone M, Saha S, Hoerger T, Lohr KN, Teutsch S, Mandelblatt J. Challenges in systematic reviews of economic analyses. Ann Intern Med. 2005;142:1073-9. [PMID: 15968032]

- National Institute for Health and Care Excellence. Guide to the Methods of Technology Appraisal. London: National Institute for Health and Care Excellence; 2013.

- Sainfort F, Kuntz KM, Gregory S, Butler M, Taylor BC, Kulasingam S, et al. Adding decision models to systematic reviews: informing a framework for deciding when and how to do so. Value Health. 2013;16:133-9. [PMID: 23337224]

Appendix: U.S. Preventive Services Task Force Members

Members of the USPSTF at the time this manuscript was finalized† are Kirsten Bibbins-Domingo, PhD, MD, MAS, Chair (University of California, San Francisco, San Francisco, California); David C. Grossman, MD, MPH, Vice Chair (Group Health Research Institute, Seattle, Washington); Susan J. Curry, PhD, Vice Chair (University of Iowa, Iowa City, Iowa); Karina W. Davidson, PhD, MASc (Columbia University, New York, New York); John W. Epling Jr., MD, MSEd (State University of New York Upstate Medical University, Syracuse, New York); Francisco A.R. García, MD, MPH (Pima County Department of Health, Tucson, Arizona); Matthew W. Gillman, MD, SM (Harvard Medical School and Harvard Pilgrim Health Care Institute, Boston, Massachusetts); Alex R. Kemper, MD, MPH, MS (Duke University, Durham, North Carolina); Alex H. Krist, MD, MPH (Fairfax Family Practice Residency, Fairfax, and Virginia Commonwealth University, Richmond, Virginia); Ann E. Kurth, PhD, RN, MSN, MPH (Yale University, New Haven, Connecticut); C. Seth Landefeld, MD (University of Alabama at Birmingham, Birmingham, Alabama); Carol M. Mangione, MD, MSPH (University of California, Los Angeles, Los Angeles, California); William R. Phillips, MD, MPH (University of Washington, Seattle, Washington); Maureen G. Phipps, MD, MPH (Brown University, Providence, Rhode Island); and Michael P. Pignone, MD, MPH (University of Texas at Austin, Austin, Texas).

† For a list of current USPSTF members, go to https://www.uspreventiveservicestaskforce.org/about-uspstf/current-members

Table 1. What the USPSTF Grades Mean and Suggestions for Practice

| Grade | Definition | Suggestions for Practice |
|---|---|---|
| A | The USPSTF recommends the service. There is high certainty that the net benefit is substantial. | Offer/provide this service. |
| B | The USPSTF recommends the service. There is high certainty that the net benefit is moderate or there is moderate certainty that the net benefit is moderate to substantial. | Offer/provide this service. |
| C | The USPSTF recommends selectively offering or providing this service to individual patients based on professional judgment and patient preferences. There is at least moderate certainty that the net benefit is small. | Offer/provide this service for selected patients depending on individual circumstances. |
| D | The USPSTF recommends against the service. There is moderate or high certainty that the service has no net benefit or that the harms outweigh the benefits. | Discourage the use of this service. |
| I statement | The USPSTF concludes that the current evidence is insufficient to assess the balance of benefits and harms of the service. Evidence is lacking, of poor quality, or conflicting, and the balance of benefits and harms cannot be determined. | Read the Clinical Considerations section of the USPSTF Recommendation Statement. If the service is offered, patients should understand the uncertainty about the balance of benefits and harms. |
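The grade definitions in Table 1 can be read as a lookup from a certainty level and a magnitude of net benefit to a letter grade. The following is a minimal illustrative sketch of that reading, not a USPSTF tool. The names `Certainty`, `NetBenefit`, and `assign_grade` are hypothetical, and mapping low certainty to an I statement is an assumption drawn from the "insufficient evidence" wording in Tables 1 and 2.

```python
from enum import Enum


class Certainty(Enum):
    HIGH = "high"
    MODERATE = "moderate"
    LOW = "low"


class NetBenefit(Enum):
    SUBSTANTIAL = "substantial"
    MODERATE = "moderate"
    SMALL = "small"
    ZERO_OR_NEGATIVE = "zero or negative"


def assign_grade(certainty: Certainty, net_benefit: NetBenefit) -> str:
    """Return the grade suggested by the Table 1 definitions (illustrative only)."""
    # Assumption: low certainty means the evidence is insufficient (I statement).
    if certainty is Certainty.LOW:
        return "I"
    # D: moderate or high certainty of no net benefit, or harms outweigh benefits.
    if net_benefit is NetBenefit.ZERO_OR_NEGATIVE:
        return "D"
    # C: at least moderate certainty that the net benefit is small.
    if net_benefit is NetBenefit.SMALL:
        return "C"
    # A: high certainty that the net benefit is substantial.
    if certainty is Certainty.HIGH and net_benefit is NetBenefit.SUBSTANTIAL:
        return "A"
    # B: high certainty of moderate net benefit, or moderate certainty
    # that the net benefit is moderate to substantial.
    return "B"


if __name__ == "__main__":
    print(assign_grade(Certainty.MODERATE, NetBenefit.SUBSTANTIAL))  # B
```

In practice the grade reflects deliberation over the whole body of evidence; the sketch only encodes the final mapping stated in the table.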

Table 2. USPSTF Levels of Certainty Regarding Net Benefit

| Level of Certainty* | Description |
|---|---|
| High | The available evidence usually includes consistent results from well-designed, well-conducted studies in representative primary care populations. These studies assess the effects of the preventive service on health outcomes. This conclusion is therefore unlikely to be strongly affected by the results of future studies. |
| Moderate | The available evidence is sufficient to determine the effects of the preventive service on health outcomes, but confidence in the estimate is constrained by such factors as: |
| Low | The available evidence is insufficient to assess effects on health outcomes. Evidence is insufficient because of: |

* The USPSTF defines certainty as "likelihood that the USPSTF assessment of the net benefit of a preventive service is correct." The net benefit is defined as benefit minus harm of the preventive service as implemented in a general primary care population. The USPSTF assigns a certainty level on the basis of the nature of the overall evidence available to assess the net benefit of a preventive service.

Table 3. Purposes of Decision Modeling for USPSTF Topics

| Topic | Previous Recommendation: Year | Previous Recommendation: Grade | Purpose of Using Model-Based Analyses | Most Recent Recommendation: Year | Most Recent Recommendation: Grade | Reference | Incorporation of Modeling Results in Recommendation |
|---|---|---|---|---|---|---|---|
| Colorectal cancer | 2008 | A recommendation | Assess screening method (e.g., colonoscopy, fecal occult blood test, and sigmoidoscopy); assess ages at which to begin and end screening; assess screening interval | 2016 | A recommendation (C for ages 76–85 y) | 1 | Modeling identified sigmoidoscopy alone as the strategy with the least benefits. Caution added to recommendations. |
| Breast cancer | 2009 | B recommendation | Assess ages at which to begin and end screening; assess screening interval; assess potential benefits | 2016 | B recommendation (C for ages 40–49 y) | 2 | Modeling was useful in understanding benefits and harms of different screening intervals and starting ages. |
| Cervical cancer | 2003 | A recommendation | Assess screening interval; assess ages at which to begin and end screening; assess screening method (human papillomavirus testing, human papillomavirus and cytology testing, and liquid-based vs. conventional cytology) | 2012 | A recommendation (D for ages <21 y and >65 y) | 3 | Modeling was useful in comparing alternative screening strategies; it helped to identify cotesting with the human papillomavirus test every 5 y as an effective option. |
| Lung cancer | 2004 | I statement | Assess ages at which to begin and end screening; assess screening interval (1, 2, or 3 y); assess eligibility for screening (pack-years of smoking history or years since quitting); assess eligibility to stop screening (years since quitting) | 2013 | B recommendation for adults aged 55–80 y with a 30–pack-year smoking history and who currently smoke or have quit within the past 15 y | 4 | Modeling informed choice of criteria for screening (starting and stopping ages, years of smoking, and years since last smoked). |
| Aspirin use for the primary prevention of cardiovascular disease and colorectal cancer* | - | - | Integrate varying benefits and harms for subpopulations on the basis of risk prediction for cardiovascular disease; assess ages at which to begin aspirin use; integrate evidence on cardiovascular disease and prevention of colorectal cancer | 2016 | B recommendation for adults aged 50–59 y with a 10-y risk for cardiovascular disease ≥10% (C for ages 60–69 y) | 5 | Modeling was useful in estimating net benefit by age and sex; it informed age stratification and corresponding grades. |

* New topic.

Figure 1. Generic Analytic Framework for Screening Topics

Key Questions

1. Is there direct evidence that screening reduces morbidity and/or mortality?

2. What is the prevalence of disease in the target group? Can a high-risk group be reliably identified?

3. Can the screening test accurately detect the target condition?

a. What are the sensitivity and specificity of the test?

b. Is there significant variation between examiners in how the test is performed?

c. In actual screening programs, how much earlier are patients identified and treated?

4. Does treatment reduce the incidence of the intermediate outcome?

a. Does treatment work under ideal clinical trial conditions?

b. How do the efficacy and effectiveness of treatments compare in community settings?

5. Does treatment improve health outcomes for people diagnosed clinically?

a. How similar are people diagnosed clinically to those diagnosed by screening?

b. Are there reasons to expect people diagnosed by screening to have even better health outcomes than those diagnosed clinically?

6. Is the intermediate outcome reliably associated with reduced morbidity and/or mortality?

7. Does screening result in adverse effects?

a. Is the test acceptable to patients?

b. What are the potential harms, and how often do they occur?

Figure 2. Overall Process for Determining Whether to Add Decision Modeling to a Systematic Review in Preparing the Evidence for USPSTF Recommendation Statements

DM = decision modeling.

Figure 3. Estimated Lung Cancer Mortality Reduction From Annual CT Screening

Estimated lung cancer mortality reduction (as a percentage of total lung cancer mortality in the cohort) from annual CT screening, for programs with a minimum eligibility age of 55 y and a maximum of 80 y, at different smoking eligibility cutoffs and NLST scenarios.

(Reproduced from de Koning and colleagues [17] with permission from Annals of Internal Medicine.)

CT = computed tomography; NLST = National Lung Screening Trial.

Current as of: August 2016

Internet Citation: Use of Decision Models in the Development of Evidence-Based Clinical Preventive Services Recommendations. U.S. Preventive Services Task Force. May 2019.